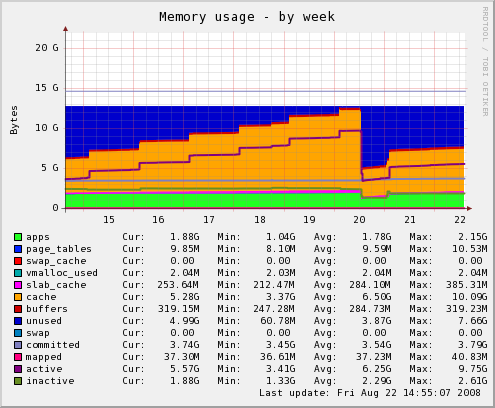

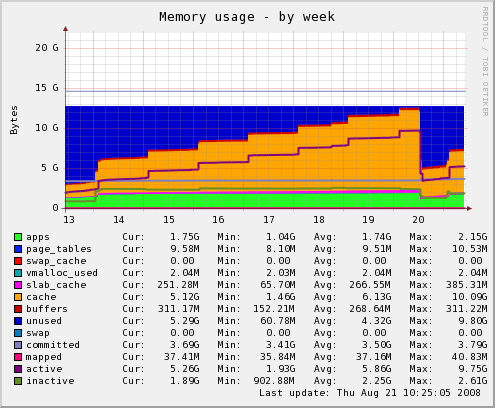

According to these Munin memory graphs, the large orange area is the OS buffer cache – memory the OS uses to cache plain ol’ file data from disk. The graph above shows one of our web servers after we upgraded its memory.

It makes sense for the OS to use the memory that applications aren’t using to speed up disk access, so memory graphs filled with orange are generally a good thing. Over the next few days, I watched the orange area grow and thought, “Great! Linux is putting all that extra memory to use.” I figured it was probably caching images and CSS files for Apache to serve. But was that true?

Looking At A Different Server

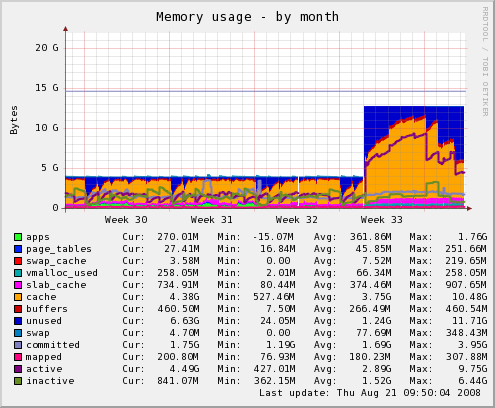

Here is a memory graph from one of our database servers after the RAM upgrade.

Again, my first thought was that the OS was caching all that juicy database data from disk. The problem is that we don’t have 12GB of data, and that step-pattern growth was suspiciously consistent.

Looking again at the web server graph, I noticed giant downward spikes of blue (unused memory) where the buffer cache was emptied. They occurred every day at 4 am, with an especially big one on Sundays. What happens every day at 4 am? The logs are rotated. And on Sundays, the granddaddy log of them all, the Apache log, gets rotated.

The Problem

It was starting to make sense. Log files seemed to be taking up most of the OS buffer cache on the web servers, which is hardly optimal. When the logs are rotated, their cached data is invalidated and freed.

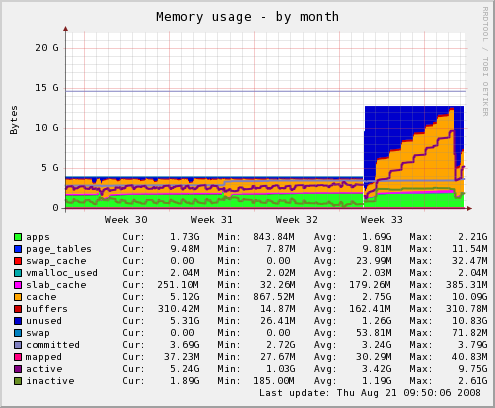

Here is a memory graph for one of our other database servers.

The step-pattern growth is missing! In fact, most of the RAM is unused. What’s the difference between the first database server and this one? The first one runs the `mysqldump` backup every night at 2:30 am, exactly when the steps appear on its memory usage graph.

It was clear to me that most of the OS buffer cache was wasted on logs and backups and such. There had to be a way to tell the OS not to cache a file.

The Solution

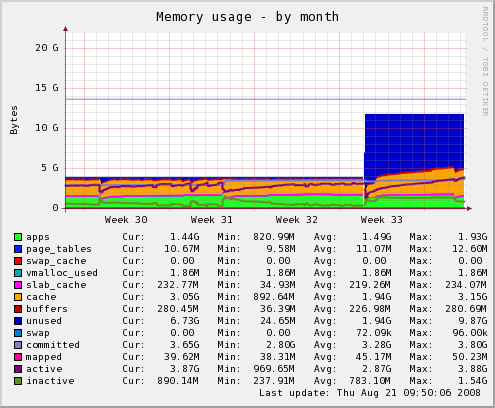

Google turned up this page: Improving Linux performance by preserving Buffer Cache State. I copied its little C program into a file and ran it on all the `mysqldump` backups. The graph above shows what happened to memory usage.

Quite a bit of buffer cache was freed. During that night’s backup, I logged the buffer cache size before and after the backup:

    % cat 2008.08.21.02.30.log
    Starting at Thu Aug 21 02:30:03 EDT 2008
    =========================================
    Cached: 4490232 kB
    Cached: 5350908 kB
    =========================================
    Ending at Thu Aug 21 02:30:55 EDT 2008

That is an increase of 860,676 kB (about 840 MiB) in the buffer cache, just under a gigabyte. How big was the new backup file?

    % ll 2008.08.21.02.30.sql
    -rw-r--r-- 1 root root 879727872 Aug 21 02:30 2008.08.21.02.30.sql

About 880 MB (839 MiB), almost exactly the amount the buffer cache grew.
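The two `Cached:` lines in the backup log have the same format as the `Cached:` line in Linux’s `/proc/meminfo`, which reports the page cache size in kB (units of 1024 bytes). Here is a minimal C sketch of taking such a snapshot, assuming that is where the logged numbers came from; the function names `cached_kb_from_text` and `read_cached_kb` are hypothetical, not part of the original setup.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: return the value of the "Cached:" line (in kB,
 * i.e. units of 1024 bytes) from text in /proc/meminfo format, or -1
 * if there is no such line. "SwapCached:" lines do not match, because
 * the literal "Cached:" must begin the line. */
long cached_kb_from_text(const char *meminfo)
{
    assert(meminfo != NULL);
    const char *line = meminfo;
    while (line != NULL && *line != '\0') {
        long kb;
        if (sscanf(line, "Cached: %ld kB", &kb) == 1)
            return kb;
        line = strchr(line, '\n');   /* advance to the next line */
        if (line != NULL)
            line++;
    }
    return -1;
}

/* Hypothetical helper: take a live snapshot on Linux. Returns -1 if
 * /proc/meminfo cannot be read or has no "Cached:" line. */
long read_cached_kb(void)
{
    char buf[8192];
    FILE *f = fopen("/proc/meminfo", "r");
    if (f == NULL)
        return -1;
    size_t n = fread(buf, 1, sizeof buf - 1, f);
    fclose(f);
    buf[n] = '\0';
    return cached_kb_from_text(buf);
}
```

Calling `read_cached_kb()` just before and just after the dump would produce numbers like the two in the log. With the logged values, the cache grew by 5350908 - 4490232 = 860676 kB, while the backup file is 879727872 / 1024 ≈ 859109 kB, so nearly all of the growth is the new backup file itself.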

Did It Work?

I used the C program from that page to make sure no database backups stayed cached by the OS, and on the web servers I did the same for logs in the logrotate config files. A couple of days later, I checked the memory graph on the database server that performs the backup: the buffer cache no longer filled up. The program appears to have worked, leaving the OS free to cache more important things.
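The program from that page isn’t reproduced here. On Linux, the usual way to tell the kernel it may drop a file’s cached pages is `posix_fadvise()` with `POSIX_FADV_DONTNEED`, so here is a minimal sketch assuming that mechanism; the function name `drop_file_cache` is hypothetical. It calls `fdatasync()` first, because pages that haven’t been written back to disk yet may not be dropped.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: ask the kernel to drop a file's pages from the
 * page cache. Returns 0 on success, -1 on failure. */
int drop_file_cache(const char *path)
{
    assert(path != NULL);
    int fd = open(path, O_RDONLY);
    if (fd < 0) {
        perror(path);
        return -1;
    }
    /* Write back dirty pages first; pages not yet written to disk
     * may not be dropped by the advice below. */
    if (fdatasync(fd) != 0) {
        perror("fdatasync");
        close(fd);
        return -1;
    }
    /* offset 0 with len 0 covers the whole file. Unlike most calls,
     * posix_fadvise returns an error number instead of setting errno. */
    int err = posix_fadvise(fd, 0, 0, POSIX_FADV_DONTNEED);
    close(fd);
    if (err != 0) {
        fprintf(stderr, "posix_fadvise(%s): %s\n", path, strerror(err));
        return -1;
    }
    return 0;
}
```

A small `main()` that calls `drop_file_cache(argv[i])` for each argument would turn this into a command-line tool that could be run on each finished backup, or from a logrotate script after the logs are rotated. Note that the call is only advice: the kernel is free to keep pages that are still in use.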