View counts, click counts, hit counts, traffic statistics… The need for analytics and reporting on web products is a must-have. Well, the easiest way to do that is to simply increment a database value each time. The problem is when those counts are coming in hundreds of times per second. Writes are the most expensive queries:

- Writes usually trigger updating an index.

- If you’re using a MyISAM storage engine, the table-level locking can get out of hand.

- Writes are not query-cacheable.

After observing subpar write behavior, I wanted to know just how many of our total writes were for updating statistics?

First, I ran mysqltuner.

% mysqltuner

...

[**] Reads / Writes: 93% / 7%

...

%

So 7% of all queries were writes. That wasn’t bad. Then, I took the binary log of all DML statements for yesterday, starting at midnight. I figured 24 hours was a good sample.

% mysqlbinlog --start-date='2010-06-06 0' binary-log.000152 > cow

I grepped out DML lines, to get rid of the binary log stuff.

% grep -i '^insert' cow > cow2

% grep -i '^update' cow >> cow2

I counted up lines that wrote to our stat tables.

% wc -l cow2

24898 cow

% grep -i -c 'stat_' cow2

20880

Doing the math: 20880 / 24898 = 0.86. About 86% of all writes to our database were for statistics. Which wasn’t too surprising. Most web sites must store and log a lot of data to know where to improve and how users are using the site.

So what do we do?

That’s the subject of another post, but the short answer is that these writes can be batched somehow. Whether the queries are batched with some sort of write-through cache, or job queues, the database won’t suffer from constant write queries.

The MySQL Slow Query Log is a required tool in the database administrator’s toolbox. It’s great for troubleshooting specific issues, but it’s also great for some rainy day application tuning.

My slow query log is in /var/lib/mysqld/db-001-slow.log and records any queries that take longer than 10 seconds (the default value for long_query_time). I can get information out of this log using mysqldumpslow.

Running `mysqldumpslow db-001-slow.log` prints out slow queries sorted by descending execution time. But that’s not useful to me, because any query can get delayed by a blip in the system.

I like running `mysqldumpslow -s c db-001-slow.log` which prints out the slow queries sorted by descending count of times that query occurred. Optimizing a query that takes 10 seconds to execute but occurs a dozen times every minute will be more beneficial than optimizing the query that takes 140 seconds to execute but rarely occurs.

The first time I tried this exercise, I revealed the following 3 types of slow queries (can’t remember the exact order now):

- Queries with lots of logic and joins returning infrequently-changing data.

- Queries using the

curdate()function, which are not query cacheable. - Queries to insert/update a stats table for content view counts.

For #1, I used an in-memory cache to cache the query results. For #2, I replaced the curdate() function with the PHP date() function everywhere I could find it. For #3, I noticed an extraneous index on the stats table, and indexes slow down inserts and updates, so I removed it. For more on handling these types of queries, see my next post.

Sometimes, I want to pop onto a database server, check the status of something, and then logout. So, for example, if I want to check on the number query cache free blocks, I run this long command:

% mysqladmin -u admin -p extended | grep -i qcache

Then I type in the password. Well, I grew tired of typing in the extra options, plus the password. Turns out, MySQL will look for the configuration file .my.cnf in your home directory after it looks in /etc/my.cnf (it looks in a few other places as well). So I put this in my ~/.my.cnf:

[client]

user=admin

password=secret

And now I can simply run:

% mysqladmin extended | grep -i qcache

and it works right away. Note that the password is stored in the clear.

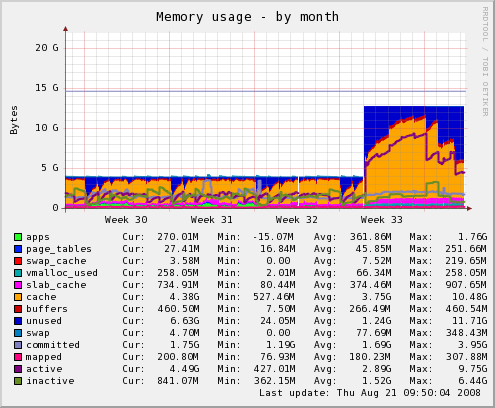

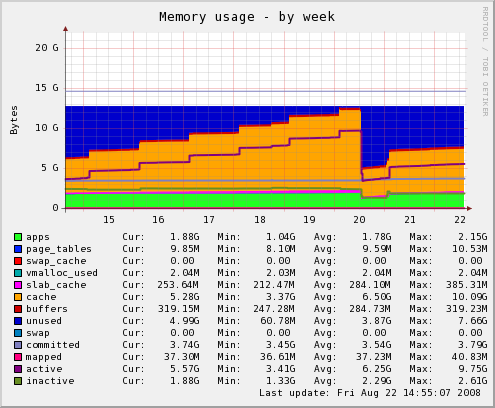

According to these Munin memory graphs, the large orange area is the OS buffer cache – a buffer the OS uses to cache plain ol’ file data on disk. The graph below shows one of our web servers after we upgraded its memory.

It makes sense that most of the memory not used by apps would be used by the OS to improve disk access. So seeing the memory graphs filled with orange is generally a good thing. After a few days, I watched the orange area grow and thought, “Great! LInux is putting all that extra memory to use.” I thought in my head that maybe it was caching images and CSS files to serve to Apache. But was that true?

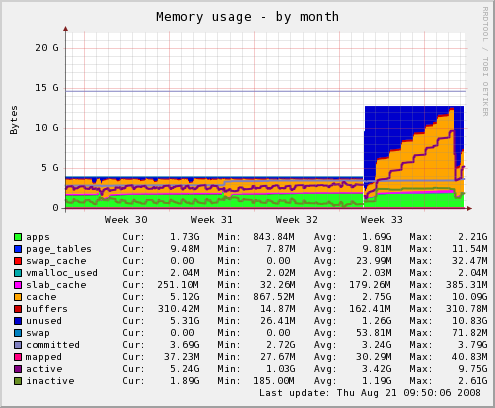

Looking At A Different Server

Here is a memory graph from one of our database servers after the RAM upgrade.

Again, I first thought that the OS was caching all that juicy database data from disk. The problem is that we don’t have 12GB of data, and that step pattern growth was suspiciously consistent.

Looking again at the web server graph, I saw giant downward spikes of blue color, where the buffer cache was emptied. (The blue is unused memory.) These occurred every day at 4 am, and on Sundays there’s a huge one. What happens every day at 4 am? The logs are rotated. And on Sundays, the granddaddy log of them all – the Apache log – is rotated.

The Problem

It was starting to make sense. Log files seem to take up most of the OS buffer cache on the web servers. Not optimal, I’m sure. And when they’re rotated, the data in the cache is invalidated and thus freed.

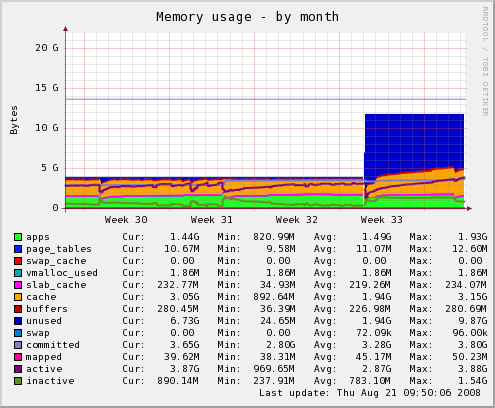

Here is a memory graph for one of our other database servers.

That step pattern growth is missing! In fact, most of RAM is unused. What is the difference between the first database server and this one? The first has the `mysqldump` backup. It occurs every night at 2:30 am, right when those step changes occur on its memory usage graph.

It was clear to me that most of the OS buffer cache was wasted on logs and backups and such. There had to be a way to tell the OS not to cache a file.

The Solution

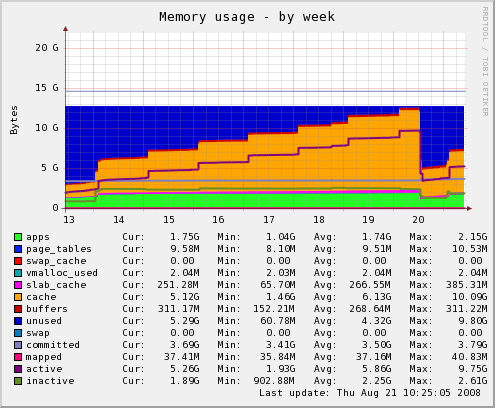

Google gave me this page: Improving Linux performance by preserving Buffer Cache State. I copied the little C program into a file and ran it on all the `mysqldump` backups. Here is the what happened to the memory usage.

Quite a bit of buffer cache was freed. On that night’s backup, I logged the buffer cache size before the backup and after.

% cat 2008.08.21.02.30.log

Starting at Thu Aug 21 02:30:03 EDT 2008

=========================================

Cached: 4490232 kB

Cached: 5350908 kB

=========================================

Ending at Thu Aug 21 02:30:55 EDT 2008

Just under a gigabyte increase in buffer cache size. What was the size of the new backup file?

% ll 2008.08.21.02.30.sql

-rw-r--r-- 1 root root 879727872 Aug 21 02:30 2008.08.21.02.30.sql

About 900MB.

Did It Work?

I used the C program on that page to ensure no database backups were cached by the OS. I did the same on the web servers in the logrotate config files. A couple days later, I checked the memory graph on the database server that performed the backup. Notice how the buffer cache did not fill up. It looked like the program worked, and the OS was free to cache more important things.

Since I knew that the MySQL Query Cache used the literal queries as keys, it made sense that MySQL did not cache queries with certain SQL functions in them, such as this one:

$sql = "select event_id from events where event_dt >= curdate()";

Because MySQL knows that this query run today is not the same query when it is run tomorrow. There are other SQL functions such as rand() and unix_timestamp() that will bypass the query cache. These are listed here.

So I avoid these functions when possible by calculating the value in PHP. For example, I’d rewrite the above query as:

$date = date('Y-m-d');

$sql = "select event_id from events where event_dt >= '$date'";