The MySQL Slow Query Log is a required tool in the database administrator’s toolbox. It’s great for troubleshooting specific issues, but it’s also great for some rainy day application tuning.

My slow query log is in /var/lib/mysqld/db-001-slow.log and records any queries that take longer than 10 seconds (the default value for long_query_time). I can get information out of this log using mysqldumpslow.

Running `mysqldumpslow db-001-slow.log` prints out slow queries sorted by descending execution time. But that’s not useful to me, because any query can get delayed by a blip in the system.

I like running `mysqldumpslow -s c db-001-slow.log` which prints out the slow queries sorted by descending count of times that query occurred. Optimizing a query that takes 10 seconds to execute but occurs a dozen times every minute will be more beneficial than optimizing the query that takes 140 seconds to execute but rarely occurs.

The first time I tried this exercise, I revealed the following 3 types of slow queries (can’t remember the exact order now):

- Queries with lots of logic and joins returning infrequently-changing data.

- Queries using the

curdate() function, which are not query cacheable.

- Queries to insert/update a stats table for content view counts.

For #1, I used an in-memory cache to cache the query results. For #2, I replaced the curdate() function with the PHP date() function everywhere I could find it. For #3, I noticed an extraneous index on the stats table, and indexes slow down inserts and updates, so I removed it. For more on handling these types of queries, see my next post.

I was reading up on memcached, when I came across some validation of the Pox Framework object model. In the FAQ, a general design approach to storing lists of data is described.

Storing lists of data into memcached can mean either storing a single item with a serialized array, or trying to manipulate a huge “collection” of data by adding, removing items without operating on the whole set. Both should be possible.

One thing to keep in mind is memcached’s 1 megabyte limit on item size, so storing the whole collection (ids, data) into memcached might not be the best idea.

Steven Grimm explains a better approach on the mailing list: http://lists.danga.com/pipermail/memcached/2007-July/004578.html

Following the link gives this quote:

A better way to deal with this kind of thing is with a two-phase fetch. So instead of directly caching an array of event data, instead cache an array of event IDs. Query that list, then use it construct a list of the keys of individual event objects you want to fetch, then multi-get that list of keys.

…Another advantage of a scheme like this is that you can update an item’s data without having to read then write every list that contains that item. Just update it by ID (like you’d do in your database queries) and all the lists that contain it will magically get the correct information.

That always feels nice.

Sometimes, I want to pop onto a database server, check the status of something, and then logout. So, for example, if I want to check on the number query cache free blocks, I run this long command:

% mysqladmin -u admin -p extended | grep -i qcache

Then I type in the password. Well, I grew tired of typing in the extra options, plus the password. Turns out, MySQL will look for the configuration file .my.cnf in your home directory after it looks in /etc/my.cnf (it looks in a few other places as well). So I put this in my ~/.my.cnf:

[client]

user=admin

password=secret

And now I can simply run:

% mysqladmin extended | grep -i qcache

and it works right away. Note that the password is stored in the clear.

Like most people, I did not know much about HTTP Keep-Alive headers other than that they could be very bad if used incorrectly. So I’ve kept them off, which is the default. But I ran across this blog post which explains the HTTP Keep-Alive, including its benefits and potential pitfalls pretty clearly.

It’s all pretty simple really. There is an overhead to opening and closing TCP connections. To alleviate this, Apache can agree to provide persistent connections by sending HTTP Keep-Alive headers. Then the browser can open a single connection to download multiple resources. But Apache won’t know when the browser is done downloading, so it simply keeps the connection open according to a Keep-Alive timeout, which is set to 15 seconds by default. The problem is the machine can only keep so many simultaneous requests open due to physical limitations (e.g. RAM, CPU, etc.) And 15 seconds is a long time.

To allow browsers to gain some parallelism on downloading files, without keeping persistent connections open too long, the Keep-Alive timeout value should be set to something very low, e.g. 2 seconds.

I’ve done this for static content only. Why only static content? It doesn’t really make much sense for the main page source itself since that’s the page the user wants to view.

I’ve mentioned before that by serving all static content on dedicated subdomains, we indirectly get the benefit of being able to optimize just those subdomains. So far, this meant:

- disabling

.htaccess files

- setting a far-future Expires: header

- avoiding setting cookies on the subdomain

Now we can add to the list: enabling HTTP Keep-Alive headers. The VirtualHost block might look like this now:

ServerName static0.yourdomain.com

ServerAlias static1.yourdomain.com

ServerAlias static2.yourdomain.com

ServerAlias static3.yourdomain.com

DocumentRoot /var/www/vhosts/yourdomain.com

KeepAlive On

KeepAliveTimeout 2

AllowOverride None

ExpiresActive On

ExpiresByType text/css "access plus 1 year"

ExpiresByType application/x-javascript "access plus 1 year"

ExpiresByType image/jpeg "access plus 1 year"

ExpiresByType image/gif "access plus 1 year"

ExpiresByType image/png "access plus 1 year"

Note the following applies to Windows Vista, but is probably easier on MacOS/Linux.

Is your hosts file becoming monstrous? Do you have an alias or shortcut to your hosts file because you edit it so often? Tired of manually adding every subdomain and domain you work on?

I was too when I thought there must be a better way. And there was.

The general idea is this: by installing a local DNS nameserver in BIND, we can set up local development domains that look like regular domains on the internet. For real domains, we’ll just forward the requests on to a real nameserver. This gives us a couple more benefits: 1) we can use the local nameserver as a caching nameserver to speed up DNS queries (in theory, I have not actually done this), and 2) we can choose to use any DNS service we wish, i.e. OpenDNS, or Google DNS.

Here are the steps.

- Follow these instructions on installing and configuring BIND and configuring a zone for your local domain.

- I installed BIND to

C:\Windows\system32\dns.

- Here is my

named.conf in its entirety.

options {

directory ";c:\windows\system32\dns\zones";

allow-transfer { none; };

forward only;

forwarders {

//208.67.222.222; // OpenDNS

//208.67.220.220;

8.8.8.8; // Google DNS

8.8.4.4;

};

query-source address * port 53;

};

/*

logging {

channel queries_log {

file "c:\windows\system32\dns\var\queries.log";

print-severity yes;

print-time yes;

};

category queries { queries_log ; };

};

*/

zone "work.local" IN {

type master;

file "work.local.txt";

};

key "rndc-key" {

algorithm hmac-md5;

secret "xxxxxxxxxxxxxxxxxxxxxxxx";

};

controls {

inet 127.0.0.1 port 953

allow { 127.0.0.1; } keys { "rndc-key"; };

};

- I created a zone file for my development domain work.local following this zone file example. Here is the zone file in its entirety. Note the

CNAME wildcard record.

$TTL 86400

@ IN SOA ns1.work.local. admin.work.local. (

2008102403

10800

3600

604800

86400 )

@ NS ns1.work.local.

IN A 127.0.0.1

ns1 IN A 127.0.0.1

www IN A 127.0.0.1

* IN CNAME www

- Start or restart the BIND service.

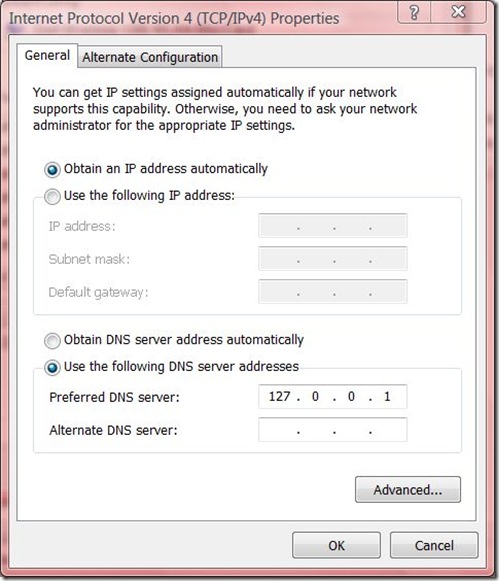

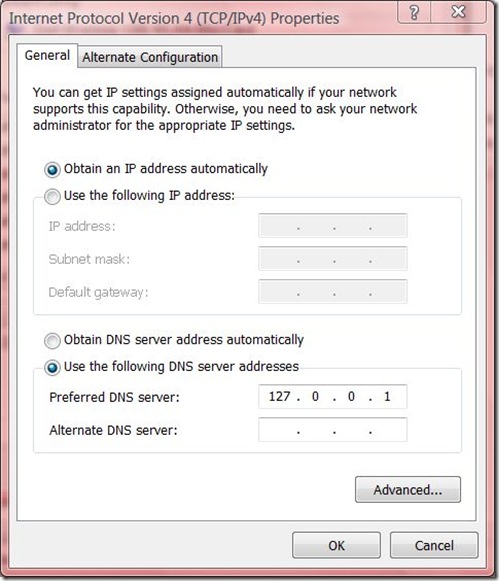

- Configure you network connection to use 127.0.0.1 as your primary nameserver, instead of DHCP. My IPv4 properties look like this:

- Flush the Windows DNS cache by running:

C:\> ipconfig /flushdns

- Test BIND by pinging

www.work.local. If you have errors, you can uncomment the logging block in named.conf.

- Once that is working, create a

VirtualHost in Apache for your development domain. Thanks to VirtualDocumentRoot, we can map any number of subdomains to project roots. Here is my VirtualHost block.

ServerName www.work.local

ServerAlias *.work.local

VirtualDocumentRoot "C:/_work/%1"

Options Indexes FollowSymLinks Includes ExecCGI

AllowOverride All

Order allow,deny

Allow from all

- Start or restart Apache.

- Create a directory in

C:\_work, for example, C:\_work\awesomeapp. Create a test index.html file in that directory.

- You should now be able to go to http://awesomeapp.work.local in your browser and see your index.html file!

Now, you should be able to repeat step 8 for any new website you create! No editing of hosts files, no bouncing the webserver! Just create the project directory and it’s immediately available.

One other important note: Firefox has its own DNS cache independent of the OS. For sanity, restarting Firefox resets its DNS cache. You can also permanently disable DNS caching in Firefox.