I’ve mentioned Apache Bench (ab) before. httperf serves the same purpose as ab but has a few more features, plus one very nice value-add.

While ab cannot really simulate a user visiting a website and performing multiple requests, httperf can. You can feed it a number of URLs to visit and specify how many requests to send within one session. You can also spread requests out over a time period, either randomly according to a uniform or Poisson distribution, or at a constant interval.
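To make the three spacing options concrete, here is a minimal Python sketch of how send times could be generated for each. It only illustrates the idea and is not httperf's implementation; `interarrival_gaps`, `send_times`, the `kind` names and the `seed` parameter are hypothetical.

```python
import itertools
import random


def interarrival_gaps(kind: str, mean_gap: float, n: int, seed: int = 0) -> list[float]:
    """Return n gaps (seconds) between consecutive requests.

    kind is "constant", "uniform" or "poisson" (hypothetical names, not httperf flags).
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same schedule
    if kind == "constant":
        # Every request is spaced exactly mean_gap apart.
        return [mean_gap] * n
    if kind == "uniform":
        # Uniform on [0, 2 * mean_gap], which has mean mean_gap.
        return [rng.uniform(0.0, 2.0 * mean_gap) for _ in range(n)]
    if kind == "poisson":
        # A Poisson arrival process has exponentially distributed gaps;
        # expovariate takes the rate (1 / mean), not the mean.
        return [rng.expovariate(1.0 / mean_gap) for _ in range(n)]
    raise ValueError(f"unknown distribution: {kind!r}")


def send_times(gaps: list[float]) -> list[float]:
    """Turn gaps into absolute send times measured from the start of the test."""
    return list(itertools.accumulate(gaps))
```

The Poisson option is the one that best mimics independent visitors arriving at random, since bursts and lulls occur naturally instead of requests arriving in lockstep.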

But the big value-add is autobench, a Perl wrapper around httperf that automates the process of load testing a web server. Autobench runs httperf a specified number of times against a URI, increasing the number of requests per second on each run (which I roughly equate to -c in ab), so that the response rate or the response time can be graphed against requests per second: response rate or response time on the vertical axis, requests per second on the horizontal.
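Autobench itself is Perl driving httperf; the Python sketch below only mirrors the shape of its sweep: step the request rate from a low to a high value and record one result per step. The `measure` callback is a hypothetical stand-in for one httperf run, assumed to return a reply rate and a mean response time; `SweepPoint`, `rate_steps` and `run_sweep` are likewise hypothetical names.

```python
from typing import Callable, NamedTuple


class SweepPoint(NamedTuple):
    request_rate: float  # requests/sec asked for (horizontal axis)
    reply_rate: float    # replies/sec actually received
    response_ms: float   # mean response time in milliseconds


def rate_steps(low: int, high: int, step: int) -> list[int]:
    """Request rates to try, from low to high inclusive."""
    if step <= 0 or low > high:
        raise ValueError("need step > 0 and low <= high")
    return list(range(low, high + 1, step))


def run_sweep(
    low: int,
    high: int,
    step: int,
    measure: Callable[[int], tuple[float, float]],
) -> list[SweepPoint]:
    """Call measure(rate) once per step.

    measure is a hypothetical stand-in for one httperf run and must
    return (reply_rate, response_ms) for the given request rate.
    """
    points = []
    for rate in rate_steps(low, high, step):
        reply_rate, response_ms = measure(rate)
        points.append(SweepPoint(rate, reply_rate, response_ms))
    return points
```

Plotting `reply_rate` or `response_ms` against `request_rate` gives the two kinds of graph autobench produces.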

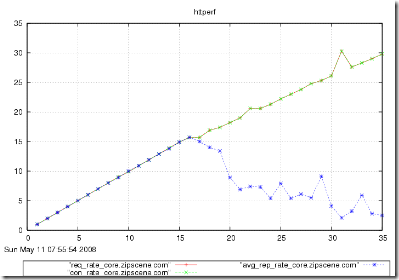

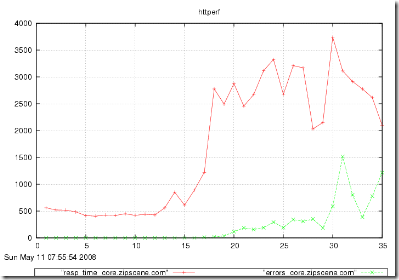

With this, you can generate pretty graphs like this:

From the graphs above, you can determine the approximate capacity of your website. In the first graph, the number of responses received equals the number of requests sent until 16 req/sec. At 16 req/sec, the number of responses starts going down as requests begin to error out. In the second graph, the response time stays level at about 500 ms (a reflection of your code and database) until 15 req/sec. At 16 req/sec the time goes up to nearly 1 s, and at 17 req/sec the response time is over a second. You would conclude that the capacity of this website is around 15 requests per second.
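The "responses keep up with requests" reading of the first graph can be automated. A minimal Python sketch, assuming you have exported (request rate, reply rate) pairs from a sweep, sorted by request rate, and that a reply rate within a small tolerance of the request rate counts as keeping up (2% here, an arbitrary choice); `capacity_from_replies` is a hypothetical helper name.

```python
from typing import Optional


def capacity_from_replies(
    points: list[tuple[float, float]], tolerance: float = 0.02
) -> Optional[float]:
    """Highest request rate whose reply rate still keeps up.

    points: (request_rate, reply_rate) pairs sorted by request_rate.
    tolerance: fraction of shortfall still counted as "equal" (assumed 2%).
    Returns None if even the lowest rate falls short.
    """
    capacity = None
    for request_rate, reply_rate in points:
        if reply_rate >= request_rate * (1.0 - tolerance):
            capacity = request_rate
        else:
            # First rate where replies fall behind: stop scanning.
            break
    return capacity
```

The tolerance absorbs small measurement noise; without it, a single dropped reply at a low rate would make the estimate far too pessimistic.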

The people who provide autobench also offer an excellent HOWTO on benchmarking web servers in general.

Apache Bench is either the first or second most useful tool in my PHP toolkit (Xdebug being the other). I described the basic theory of Apache Bench in an earlier post. That’s a short post, so I won’t repeat it. This will be another short post, with a small note on how I use it day-to-day.

If you are changing something in the system for performance reasons (a piece of code, a database setting, an OS setting, anything!) and you want to see whether it makes any difference, use Apache Bench. Fire up a quick test before the change and another after it. ab runs very quickly (on the order of a few minutes even on a slow machine), so you can run 1000 requests without worrying about your sample size. I even run it on my laptop: even though the laptop introduces a lot of noise, it still gives relative results. I usually run it two ways before the change and the same two ways after.

% ab -n 1000 -c 1 http://www.whatever.com/

That usually gives me a good idea of whether performance has improved.

% ab -c 100 -t 60 http://www.whatever.com/

That usually gives me a good idea of how well it scales under load.
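Comparing the before and after runs by eye works, but the headline numbers are easy to pull out. A minimal Python sketch, assuming you saved each run's text output to a file (for example `ab -n 1000 -c 1 http://www.whatever.com/ > before.txt`); `ab_summary` and `percent_change` are hypothetical helper names. It extracts ab's Requests per second and mean Time per request lines:

```python
import re

# ab prints summary lines such as
#   Requests per second:    123.45 [#/sec] (mean)
#   Time per request:       8.100 [ms] (mean)
_RPS = re.compile(r"^Requests per second:\s+([\d.]+)", re.MULTILINE)
# Anchored on "(mean)" at end of line so the second Time per request line,
# "(mean, across all concurrent requests)", is not matched.
_TPR = re.compile(r"^Time per request:\s+([\d.]+) \[ms\] \(mean\)$", re.MULTILINE)


def ab_summary(output: str) -> dict[str, float]:
    """Pull requests/sec and mean time per request (ms) out of ab's text output."""
    rps = _RPS.search(output)
    tpr = _TPR.search(output)
    if rps is None or tpr is None:
        raise ValueError("input does not look like ab output")
    return {"rps": float(rps.group(1)), "ms": float(tpr.group(1))}


def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0
```

For example, `percent_change(ab_summary(Path("before.txt").read_text())["ms"], ab_summary(Path("after.txt").read_text())["ms"])` (with `from pathlib import Path`) gives the change in mean response time; a negative value means requests got faster.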

UPDATE: There have been reports that Apache Bench is not reliable.

When we talk about performance, we mean the response time of a single request, web page, SQL query, etc.: the actual execution time of something in the absence of load. To illustrate, suppose you wanted to test the performance of a web page using Apache Bench. You would run something like:

% ab -n 1000 -c 1 http://www.whatever.com/

The -n option sets the number of requests and -c the number of concurrent requests. Since we’re interested in end-to-end response time, we need only one concurrent request. Scalability, on the other hand, is usually about throughput: the number of requests the system can complete within a certain period of time while serving many of them concurrently. Using the example above, the Apache Bench command should be something like:

% ab -c 100 -t 60 http://www.whatever.com/

The -t option sets the maximum time, in seconds, to spend on the test. We can vary -c until individual response times begin to grow, at which point something in the system has reached its maximum capacity.
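The procedure in the last paragraph can be scripted. The Python sketch below assumes you run ab once per concurrency level (for instance passing `ab_command(...)` to `subprocess.run` and parsing the mean "Time per request" line) and collect `(concurrency, mean_ms)` pairs. `ab_command`, `ab_throughput` and `saturation_point` are hypothetical helper names, and the 1.5× growth threshold is an arbitrary choice, not a standard.

```python
from typing import Optional


def ab_command(concurrency: int, seconds: int, url: str) -> list[str]:
    """Argument list for one timed ab run, e.g. for subprocess.run(...)."""
    return ["ab", "-c", str(concurrency), "-t", str(seconds), url]


def ab_throughput(concurrency: int, mean_ms: float) -> float:
    """Requests/sec implied by ab's mean 'Time per request' at a given -c.

    ab reports Time per request (mean) = concurrency * elapsed_ms / completed
    and Requests per second = completed / elapsed_s, so the two are linked by
    rps = concurrency * 1000 / mean_ms.
    """
    if concurrency < 1 or mean_ms <= 0:
        raise ValueError("concurrency must be >= 1 and mean_ms > 0")
    return concurrency * 1000.0 / mean_ms


def saturation_point(
    results: list[tuple[int, float]], growth: float = 1.5
) -> Optional[int]:
    """First -c whose mean response time exceeds growth * the baseline.

    results: (concurrency, mean_ms) pairs sorted by concurrency; the first
    pair is the baseline. growth=1.5 is an assumed threshold.
    Returns None if response time never grows that much.
    """
    if not results:
        raise ValueError("no results")
    baseline_ms = results[0][1]
    for concurrency, mean_ms in results[1:]:
        if mean_ms > growth * baseline_ms:
            return concurrency
    return None
```

`ab_throughput` shows why response time is the signal to watch: while the mean response time stays flat, doubling -c doubles throughput, but once response time grows in step with -c, throughput stops rising. That plateau is the capacity limit described above.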